AI Exchange @ UVA [2025.4]

Our mission is simple: to keep the UVA community informed, engaged, and inspired as we navigate this transformation together.

Research Spotlight: Building Fair and Secure AI at UVA

AI Innovation in Computing and Data Science: David Evans, Professor of Computer Science and Olsen Bicentennial Professor of Engineering at UVA, and Chirag Agarwal, Assistant Professor at UVA’s School of Data Science, explore how we can design intelligent systems that are not only powerful but also transparent, ethical, and secure. Dr. Agarwal leads the Aikyam Lab, where his research focuses on building trustworthy, explainable, and fair AI systems. Dr. Evans brings decades of expertise in computer security, privacy, and trustworthy computing.

🔑 Key Insights

Trustworthy AI Balances Security and Fairness

David Evans emphasized that true AI trust goes beyond preventing attacks or data leaks. It’s about understanding what AI systems reveal and how they make decisions, and about ensuring those decisions are fair and accountable. Security and fairness, he noted, must evolve together.

Explainability Strengthens AI Safety

Chirag Agarwal challenged the idea that explainability limits performance. His lab’s research shows that interpretable models can remain powerful while reducing vulnerability to adversarial attacks, which indicates that transparency can actually enhance safety and reliability.

Academia’s Edge: Depth Over Hype

Both guests agreed that universities like UVA play a vital role in shaping responsible AI. While industry rushes to scale models, academia can pause, ask deeper questions, and build benchmarks that expose hidden flaws—ensuring innovation stays aligned with ethics and human values.

👉 Key idea: Trustworthy AI depends on ensuring security, privacy, fairness, and explainability.

Research: Will We Design the Economy, or Will AI Do It for Us?

Brynjolfsson, E., Korinek, A., & Agrawal, A. (2025). A Research Agenda for the Economics of Transformative AI. NBER Working Paper No. 34256.

(Anton Korinek, UVA economist — and a repeat feature here for his work on using AI to develop research — is one of the authors.)

💡 The Big Idea

Transformative AI could be the economic event of the century — or the biggest policy failure in history. This paper is a call for economists to build the frameworks, data, and theory now, before the machines do it for us.

Their argument: economists can’t sit this one out. We need a new research agenda for what they call “Transformative AI” (TAI) — and fast.

🧭 Nine Grand Challenges

They outline nine fronts where economics has to catch up with technology:

Growth: How will TAI change the pace of productivity — and what new bottlenecks (like compute or energy) will emerge?

Innovation: Will AI turbocharge discovery across disciplines — or just reinforce existing silos?

Inequality: What happens when machines can do almost all work, and wages lose their anchor?

Power: Will decision-making concentrate in a handful of firms or countries?

Geopolitics: How will TAI reorder trade, alliances, and global security?

Information: Can truth survive when AI floods the world with convincing content?

Safety: What’s the right balance between economic growth and existential risk?

Meaning: If Keynes’ “economic problem” is solved, what gives life purpose?

Transition: How do we navigate the messy in-between — with labor shocks, retraining, and policy lagging behind?

🧪 Rethinking the economist’s toolkit

The authors don’t just pose questions — they sketch new methods too: growth models that handle explosive feedback loops, “TAI dashboards” to track productivity and substitution trends, new metrics for welfare when digital goods are free, and even AI-agent simulations that could test policies before they’re implemented in the real world.

Bridging the Translational Gap: Programmable Virtual Humans Offer a New Path for Drug Discovery

A team led by You Wu and Lei Xie (Northeastern University) with Philip E. Bourne (UVA) has unveiled a bold new idea: programmable virtual humans — AI-driven digital models that mimic real human biology.

The Problem: Most drugs that look promising in the lab fail in human trials — the dreaded translational gap.

The Innovation: Virtual humans integrate physics-based models, biological networks, and AI to simulate how drugs behave inside the body — from molecules to organs.

Why It Matters: This could mean faster, safer, and cheaper drug development — with fewer animal tests and more precise predictions.

The Vision: A future where scientists can test thousands of therapies virtually before any human trial begins.

AI by the Numbers

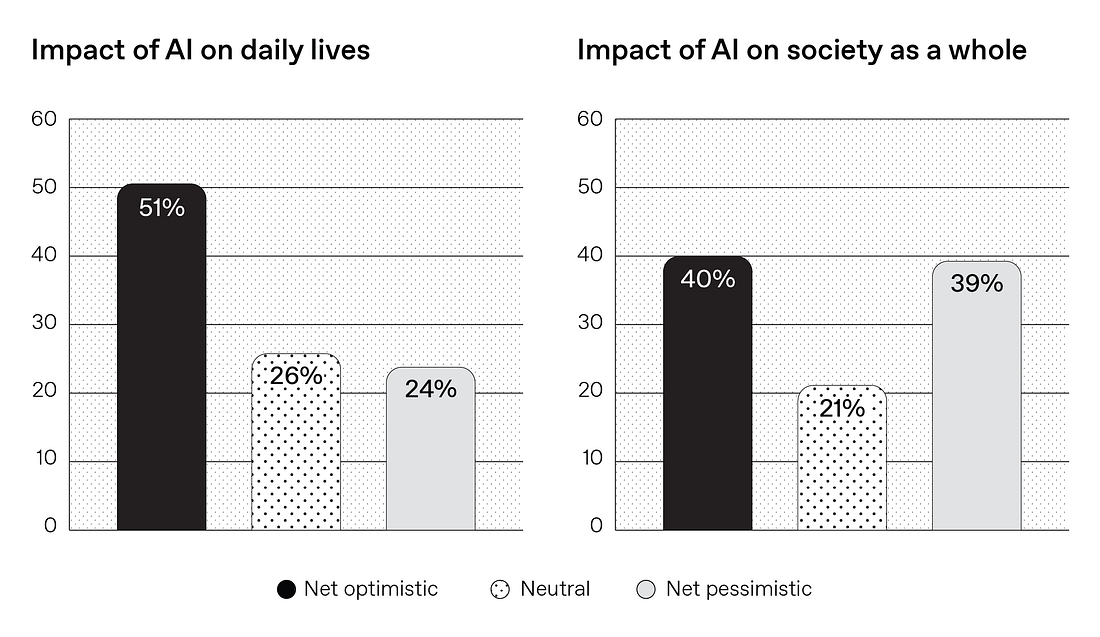

“AI’s Optimism Gap” (TrueDot Survey, Oct 2024)

Key Findings:

Personal vs. societal optimism:

51% are optimistic about AI improving their daily lives, while only 40% feel positive about AI’s broader social impact.

Roughly a quarter of respondents are neutral, and about a quarter are pessimistic in each category.

The gap reflects confidence in AI’s convenience and productivity benefits but doubt about whether it can be managed responsibly at scale.

Public sentiment themes:

Most Americans want AI-driven innovation in healthcare, education, and public services, yet remain worried about society losing control over AI systems.

As TrueDot CEO Jon Cohen puts it: people believe AI can “make their own lives faster and sharper,” but they’re “less sure it’ll make the world better or that we can manage it.”

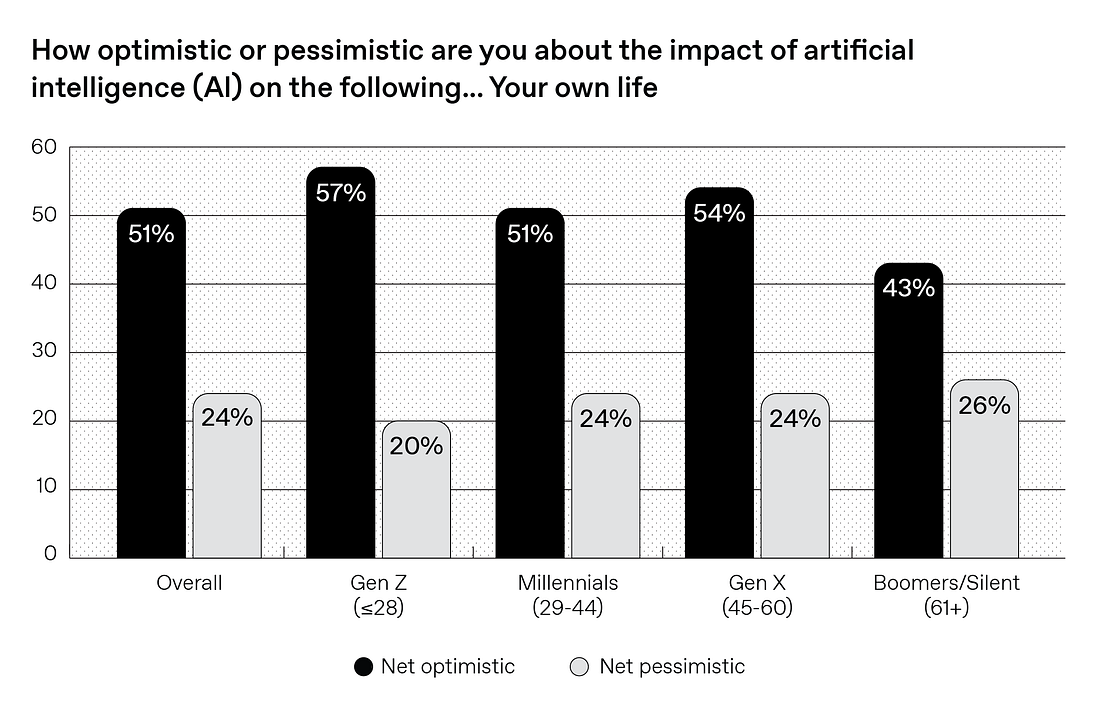

Generational Differences

Optimism is highest among Gen Z and lowest among the oldest respondents, though Millennials trail Gen X:

Gen Z (≤ 28 yrs): 57% optimistic

Millennials (29–44 yrs): 51%

Gen X (45–60 yrs): 54%

Boomers / Silent (61+ yrs): 43%

Millennials’ caution may stem from lingering economic scars from the Great Recession and skepticism toward tech companies—seeing AI as another potential financial or employment risk.

News Around Grounds

Capital One and UVA Engineering Announce Major Partnership to Advance AI Research and Education

Capital One and the University of Virginia School of Engineering and Applied Science have announced a transformative $4.5 million partnership to establish the Capital One AI Research Neighborhood and launch the Capital One Ph.D. Fellowship Awards. This collaboration will accelerate artificial intelligence research and education while helping to create the next generation of AI leaders in financial technology.

The partnership includes a $2 million investment to create the Capital One AI Research Neighborhood within UVA Engineering’s future Whitehead Road Engineering Academic Building. The University will match that investment with an additional $2 million, for a total facility investment of $4 million. A further $500,000 will establish the Capital One Ph.D. Fellowship Awards program to support Ph.D. students conducting AI research.

Upcoming AI Events @ UVA

Artificial Intelligence & Human Resilience in Bruce Holsinger’s Culpability

🗓 November 13, 2025 | 3:00 PM–4:00 PM

Lifetime Learning is excited to feature Bruce Holsinger, author and UVA English Professor, for a dynamic discussion of his latest novel, Culpability. This gripping story explores the promises and dangers of AI through the lens of a family caught in a tragic accident. Oprah Winfrey called it “riveting until the very last shocking sentence!” Bruce will share insights into the novel, the role of AI in our lives, and how we navigate resilience in uncertain times.

Read the book before the event! Copies of Culpability are available online or in store at the UVA Bookstore.

Datapalooza: School of Data Science

🗓 November 14, 2025 | 1:00 PM–5:00 PM | School of Data Science (1919 Ivy Road)

Explore AI, data, and truth at Datapalooza 2025—UVA’s fall showcase of data science in action across fields and for the public good.

UVA AI Conference

🗓 December 5, 2025 | 8:00 AM–6:00 PM | Forum Hotel

Minding the Gap: How AI Drives Performance and What Limits Its Impact

On December 5, 2025, the UVA Conference on Ethical AI in Business will cut through the noise to explore head-on how AI drives performance and what limits its impact.

AI Fellowship Opportunity

🗓 Application Due December 12, 2025

The UVA Darden LaCross Institute for Ethical Artificial Intelligence in Business (LaCross AI Institute) is requesting proposals for multidisciplinary research fellowships focused on ethical AI in business. The fellowships will cover faculty- and student-centric research activities for a period of 12 to 24 months, up to a total of $100,000.

2026 Fellowships in AI Research (FAIR) Symposium

🗓 January 30, 2026 | Forum Hotel

Join us at The Forum Hotel for the Fellowships in AI Research (FAIR) Symposium. Hear from current fellows as they share their latest research and be among the first to meet the 2026 cohort.

Coming Soon: Faculty Voices and AI Research Toolkit

Interdisciplinary Paths to Responsible AI: Steven Johnson, a professor at the McIntire School of Commerce, studies how digital technologies and online communities influence people—especially youth—and how algorithms impact information and interaction. Tom Hartvigsen, an assistant professor at the School of Data Science, focuses on making AI systems in healthcare and other critical domains trustworthy, fair, and context-aware. Together, their work bridges business, data science, and ethics to improve how humans and AI interact.

Research Excellence Faculty Toolkit: AI research tools help faculty, staff, and students accelerate discovery by supporting data analysis, coding, visualization, literature reviews, and writing. When used responsibly, they enhance productivity, sharpen insights, and expand the scope of inquiry.

UVA AI Resources

AI for Academic Excellence - Student Toolkit: A comprehensive guide for students on the best uses of AI.

AI Agents in Economic Research (Anton Korinek): A guide for researchers on the use of AI agents.

UVA Claude Builders Student Club: A group of 250+ members for those interested in developing with Claude.

UVA Podcasts We Listen to

UVA Data Points: Podcast from the School of Data Science.

HOOS in STEM: Showcases the marvelous cornucopia of STEM at UVA, from the latest innovations to growth inside and outside the classroom.
